Latisha Besariani

—

Timeline

4 Weeks, March 2025

Tools

Google Colab, Python, Hugging Face

Overview

Turnsense is a lightweight end-of-utterance (EOU) detector built for real-time voice AI. It is built on SmolLM2‑135M, fine-tuned with low-power devices like the Raspberry Pi in mind, and reaches 97.5% accuracy (93.75% after 8‑bit quantization) without sacrificing speed.

EOU detection helps conversational systems figure out when someone’s actually done speaking, beyond what Voice Activity Detection (VAD) can offer. Turnsense does this by looking at the text itself: patterns, structure, and meaning.
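To make the difference between VAD and EOU concrete, here is a minimal sketch of how a voice pipeline might combine the two signals. The function name, the thresholds, and the idea of passing in a precomputed EOU probability are all assumptions for illustration, not Turnsense's actual API.

```python
def should_end_turn(
    silence_ms: float,
    eou_prob: float,
    eou_threshold: float = 0.5,      # hypothetical cut-off for the text score
    min_silence_ms: float = 300.0,   # hypothetical: ignore very short gaps
    max_silence_ms: float = 2000.0,  # hypothetical: give up waiting after this
) -> bool:
    """Decide whether the assistant should take the turn.

    silence_ms comes from VAD (time since speech was last detected);
    eou_prob comes from a text-based EOU model run on the transcript so far.
    """
    # The user is still speaking, or the gap is too short to mean anything.
    if silence_ms < min_silence_ms:
        return False
    # A very long silence ends the turn even if the text looks unfinished.
    if silence_ms >= max_silence_ms:
        return True
    # In between, the text decides: a thinking pause keeps the mic open.
    return eou_prob >= eou_threshold


# Same half-second pause, different transcripts.
print(should_end_turn(500, 0.1))  # text looks unfinished
print(should_end_turn(500, 0.9))  # text looks complete
```

A VAD-only system would treat both calls the same way, since the silence is identical. The text score is what separates a pause for thought from a finished sentence.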

The model is open source, so check out the repo if you’re curious.

How I Made It

One afternoon I was tinkering with my TARS‑AI voice assistant on a Raspberry Pi and noticed that something felt off: the conversations weren’t flowing naturally. Voice Activity Detection (VAD) clearly wasn’t enough. I hadn’t even finished speaking, and TARS would suddenly shut off because it didn’t detect any voice while I was still thinking about what to say. So I started scouring the web to figure out how to make turn‑taking feel more human, and that’s when I came across LiveKit’s turn detector model, which I think had just come out around January. It was built on SmolLM2‑135M, but its license locks it into LiveKit Agents, so I couldn’t use it in TARS‑AI.

I was frustrated, so I dove into the MultiWOZ dataset, tried annotating 20K examples with an LLM, and hit a wall at 60% accuracy: the raw LLM‑annotated data was too noisy and inconsistent to train on. After a late‑night face‑palm, I ended up hand‑curating a 2K‑sample set of punctuated user sentences, trimmed the context window to the last utterance only, and fine‑tuned SmolLM2‑135M with a LlamaForSequenceClassification head. To keep training feasible on my limited compute, I used LoRA adapters, which add only a few million extra parameters to tune, so I could fit everything on a single Colab GPU and finish in under an hour.
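For a rough sense of why LoRA keeps the trainable parameter count so small, here is a back-of-the-envelope sketch. The layer shapes are what I understand SmolLM2‑135M's config to be (treat them as assumptions and check `config.json`), and the rank and target modules are hypothetical choices, not necessarily the ones used for Turnsense.

```python
def lora_param_count(d_in: int, d_out: int, rank: int) -> int:
    """Trainable parameters LoRA adds to one d_in x d_out linear layer.

    LoRA learns two low-rank matrices, A (rank x d_in) and B (d_out x rank),
    so the count is rank * (d_in + d_out).
    """
    return rank * (d_in + d_out)


# Assumed SmolLM2-135M shapes (verify against the model's config.json).
HIDDEN = 576      # hidden size
KV_DIM = 192      # 3 key/value heads x 64 dims per head
NUM_LAYERS = 30   # transformer blocks

# Hypothetical setup: rank-16 adapters on the four attention projections.
RANK = 16
per_layer = (
    lora_param_count(HIDDEN, HIDDEN, RANK)    # q_proj
    + lora_param_count(HIDDEN, KV_DIM, RANK)  # k_proj
    + lora_param_count(HIDDEN, KV_DIM, RANK)  # v_proj
    + lora_param_count(HIDDEN, HIDDEN, RANK)  # o_proj
)
total = per_layer * NUM_LAYERS

print(f"LoRA parameters per layer: {per_layer:,}")
print(f"LoRA parameters in total:  {total:,}")
```

Under these assumptions the adapters come to about 1.8 million parameters, a small fraction of the 135M base weights. Adding the MLP projections or raising the rank would push that figure higher.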

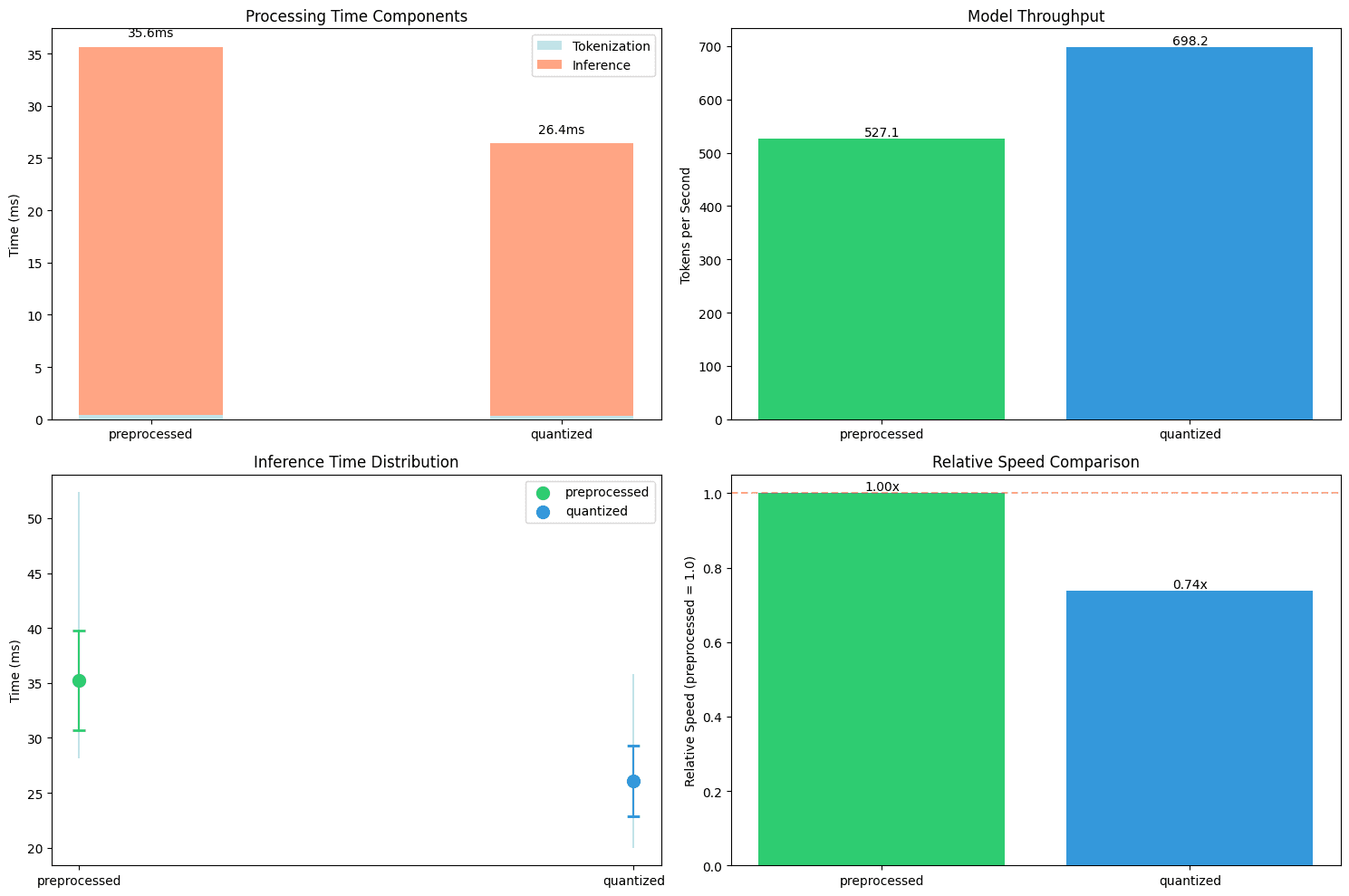

Honestly, I just picked sequence classification because it made sense for a yes/no task like “Is this the end?”, rather than chasing next‑token predictions. Maybe LiveKit had better reasons to go with LlamaForCausalLM, especially since SmolLM2 was probably trained with a prompt‑based objective. I haven’t really dug into what that output difference means yet, but if I ever explore causal LM heads, I’ll probably need to rethink how I tokenize and prep the data too. For now, this setup gets me where I need to be: 97.5% accuracy (93.75% after 8‑bit quantization) and solid real‑time performance on the Pi.
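As a small illustration of the yes/no framing, this is roughly what happens to a sequence classification head's output: two logits go through a softmax and one of them is read as the end-of-utterance probability. The label order and the example logits are made up for illustration.

```python
import math


def eou_probability(logits: list[float], eou_index: int = 1) -> float:
    """Softmax over the class logits; return the probability of the EOU class.

    eou_index=1 assumes labels are ordered [not_finished, finished],
    which is a hypothetical convention here.
    """
    m = max(logits)  # subtract the max for numerical stability
    exps = [math.exp(x - m) for x in logits]
    return exps[eou_index] / sum(exps)


# Hypothetical logits for [not_finished, finished].
p = eou_probability([-1.2, 2.3])
print(f"P(end of utterance) = {p:.3f}")
print("hand back the mic" if p >= 0.5 else "keep listening")
```

A causal LM head would instead produce a distribution over the whole vocabulary at each position, so the same decision would have to be read off the probability of a specific token.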

One thing I noticed about my architecture is that it’s entirely text-based, so it misses a lot of cues and depends heavily on the quality of the STT output. You can’t really tell from just “Hello” whether someone’s done or about to keep going. In the future, I’d like to explore a multimodal approach to handle that: audio, prosody, or something else. I don’t know how yet, but I’ll figure it out.

Today Turnsense is a tiny, lean model that actually knows when to hand back the mic. It’s been a wild four‑week sprint, from wrestling with noisy LLM annotations, to reverse‑engineering LiveKit’s hints, to fine‑tuning on Colab and pushing benchmarks on a Pi, and it’s only the beginning. Next up: experimenting with hybrid heads, adding prosody cues, and maybe even a dash of multilingual flair.